27 December 2021, 18:08 (Published)

Proportionally Cropping Rotated Images (Part 1)

Filling in a Core Image gap with pen, paper & elementary school mathematics

Being the crafty Mac or iOS developer that you are, with Swift and Apple frameworks as your weapons of choice, how would you straighten up a pile of images taken by some severely horizon-challenged photographer?

By way of an example, let's consider this photo of a country road bathing in the Tuscan afternoon sun, one fine day in September. The amateur shooter appears to have attempted flight in one of da Vinci's leather-winged contraptions, capturing the horizon at a displeasingly slanted angle:

Dusting off your trusty Xcode 13, you will be tempted to try out Core Image's built-in CIStraightenFilter. Observing that a 3.5° rotation, counterclockwise, produces an acceptable result, you set the inputAngle argument to 0.06108652 radians, and call it a day:
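For reference, the conversion and filter call described above could be sketched roughly like this. The helper names `radians(fromDegrees:)` and `straightened(_:byDegrees:)` are made up for illustration; only the filter name and the `inputImage` / `inputAngle` keys come from Core Image itself. The Core Image part is guarded so the conversion still compiles where the framework is unavailable.

```swift
import Foundation
#if canImport(CoreImage)
import CoreImage
#endif

/// Converts an angle in degrees to radians.
func radians(fromDegrees degrees: Double) -> Double {
    degrees * Double.pi / 180
}

#if canImport(CoreImage)
/// Hypothetical helper: runs the built-in straighten filter on `image`.
/// Returns nil if the filter cannot be created or produces no output.
func straightened(_ image: CIImage, byDegrees degrees: Double) -> CIImage? {
    guard let filter = CIFilter(name: "CIStraightenFilter") else { return nil }
    filter.setValue(image, forKey: kCIInputImageKey)
    filter.setValue(radians(fromDegrees: degrees), forKey: kCIInputAngleKey)
    return filter.outputImage
}
#endif
```

Calling `straightened(photo, byDegrees: 3.5)` on a hypothetical `photo` image would pass the same angle value as quoted above.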

The problem

Alas, not so fast! While implementing Sashimi's image straightening tool, it quickly became obvious that CIStraightenFilter will not work for all the use cases needed there. It is documented to work like this:

The image is scaled and cropped so that the rotated image fits the extent of the input image.

In other words, straightening a 6000⨉4000 image will return a CIImage instance with a 6000⨉4000 extent. At first glance, this seems convenient, and for merely displaying the image in a simple UI, it probably often is.

In an app like Sashimi, however, the need to crop the rotated image into a rectangular shape presents a problem. What the CIStraightenFilter documentation doesn't fully spell out is that, to keep the original image's extent, the filter has to scale up the rotated image.

Suffice it to say, the Sashimi image processing pipeline never scales image pixels up, for several reasons:

- When exporting a straightened image, for example when dragging images out to Finder, you would waste both disk space, in the form of unnecessarily large image files, and processing cycles on the unnecessary scale operation. The scaling would add no new image information, and would instead yield a result that the user almost certainly does not want.

- Through the caching and rendering pipeline for image representations to display in the Sashimi user interface, operating on scaled-up pixels would also waste memory and processing power. You might think that with the typical modest straightening angles this wouldn't matter in practice — but let's look at the math soon enough!

- Even with its focused 1.0 feature set, Sashimi is pro enough of an app that users straightening images will be interested in the dimensions of the final edited image: how much resolution remains after the crop? The app does show this in the inspector, and that places a further precision requirement on the full chain of image operations performed.
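To put a rough number on the "modest angles" remark in the list above, here is a back-of-the-envelope sketch. It rests on an assumption rather than on anything Apple documents in detail: that the filter applies the smallest uniform scale at which the rotated image still covers the original extent. The function name `coverScale` is hypothetical, and this is a standard geometric estimate, not necessarily the derivation the later parts of this series arrive at.

```swift
import Foundation

/// Estimated factor by which a width × height image must be scaled up so that,
/// after being rotated by `radians` about its center, it still covers its
/// original extent. Assumption: smallest uniform scale leaving no empty corners.
func coverScale(width: Double, height: Double, radians: Double) -> Double {
    let c = abs(cos(radians))
    let s = abs(sin(radians))
    // The longer side relative to the shorter one drives the worst-case corner.
    let aspect = max(width / height, height / width)
    return c + aspect * s
}

let scale = coverScale(width: 6000, height: 4000, radians: 3.5 * Double.pi / 180)
let pixelFactor = scale * scale
print(scale, pixelFactor)
```

Under that assumption, the 3.5° example on a 6000⨉4000 image works out to roughly a 9% linear upscale, which is close to a fifth more pixels than the crop actually contains.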

In summary: out with CIStraightenFilter, and in with the basics: CIAffineTransform and CICrop!

The second problem

Hacking together an extension method for straightening a CIImage — ⚠️ without concern for proper error handling and all that, yet ⚠️ — might look something like this:

    import CoreImage

    public extension CIImage {
        /// Rotates the image by `radians`, then crops the result.
        func rotated(by radians: CGFloat) -> CIImage {
            // Build a rotation about the origin from the angle parameter.
            let transform = CGAffineTransform(rotationAngle: radians)
            let filter = CIFilter(name: "CIAffineTransform")!
            filter.setDefaults()
            filter.setValue(self, forKey: kCIInputImageKey)
            filter.setValue(transform, forKey: "inputTransform")
            let rotatedImage = filter.outputImage!
            let cropRect = … // Indeed, how do we get this?!
            return rotatedImage.cropped(to: cropRect)
        }
    }

Uh oh.

Sifting through the documentation, and examining CIStraightenFilter in the debugger for inspiration, it does not look like there is any way to extract the crop rectangle from the Core Image API.
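What the rotated image does report is its extent, and as far as I can tell that is simply the axis-aligned bounding box of the rotated rectangle, empty corners included. A minimal sketch of that box's size, using a hypothetical `rotatedBoundingSize` function and plain Foundation math, shows why it is no substitute for the crop rectangle:

```swift
import Foundation

/// Size of the axis-aligned bounding box of a width × height rectangle
/// rotated by `radians`. This grows with the angle; it is the opposite of a crop.
func rotatedBoundingSize(width: Double, height: Double,
                         radians: Double) -> (width: Double, height: Double) {
    let c = abs(cos(radians))
    let s = abs(sin(radians))
    return (width: width * c + height * s,
            height: width * s + height * c)
}

let size = rotatedBoundingSize(width: 6000, height: 4000,
                               radians: 3.5 * Double.pi / 180)
print(size.width, size.height)
```

For the 6000⨉4000 example at 3.5°, this comes out at roughly 6233 by 4359, which is larger than the original and has a slightly different aspect ratio.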

Searching the internet, then, is even worse: you will find several suggested solutions, but they are all either incomplete, incorrect or, most curiously, interested in the wrong thing. Many people appear to want to calculate the maximum rectangle contained by the rotated image bounds, but none seem concerned with the proportional one, which is the only thing that makes sense here.

For completeness, hunting down computer graphics e-books, or even ones printed on fibers from dead trees, didn't yield a single option where the ToC would have suggested covering this topic.

So, let's solve it on our own, shall we? How hard can it be?

The Implicit Crop

I will confess that with this one filler of a sentence I am jumping over about a week's roadtrip from Vasto, Abruzzo, to Rome on the west coast, to Florence towards the north, and back to the east coast. 1352 kilometers of riding at the back of a Fiat van, trying to crack the proportional crop math required here.

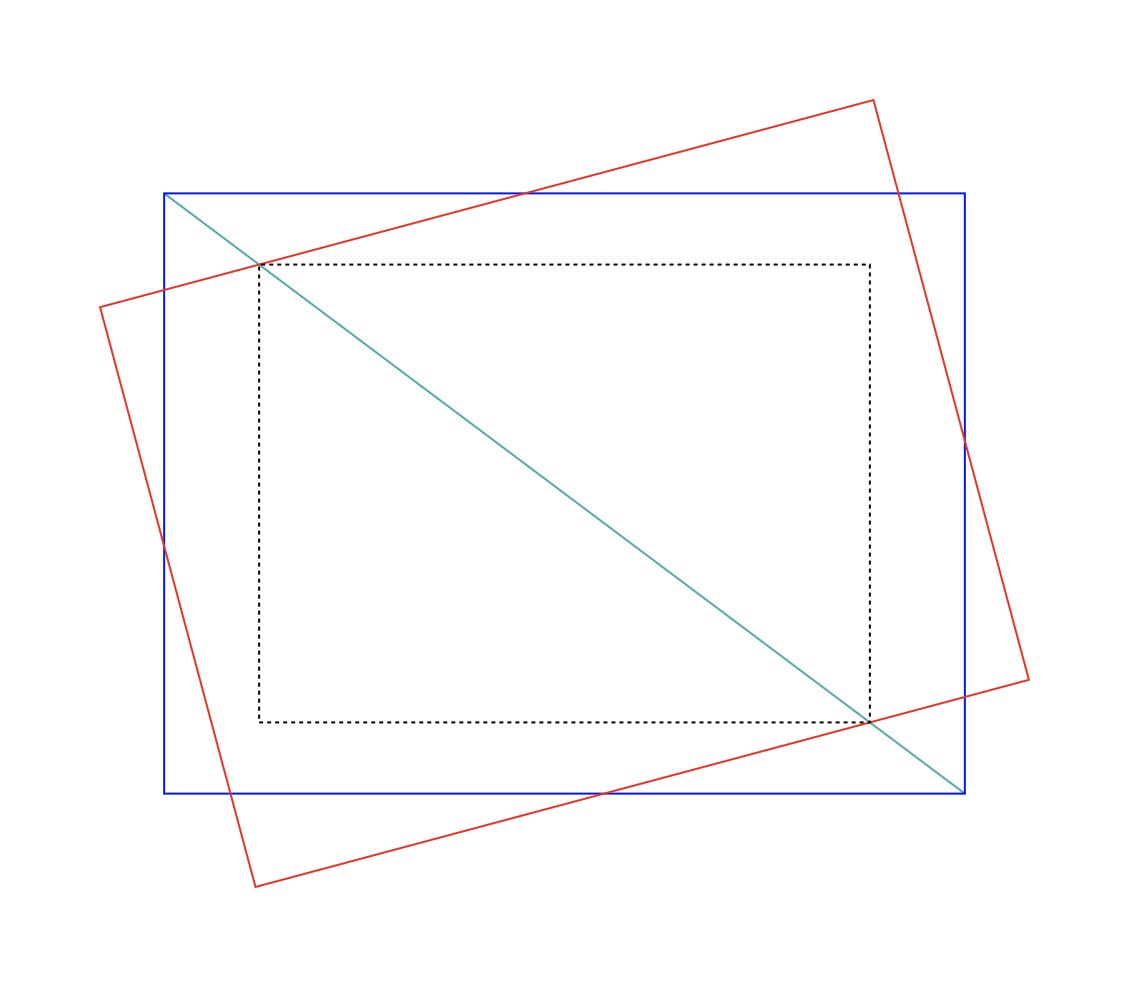

Fast forward over all the bumps in the road, the solution is in this diagram:

The blue rectangle represents the original image.

The red rectangle stands for the original image rotated by an angle, counterclockwise. This, incidentally, in all Apple frameworks I've dealt with, is the direction positive angle values go.

The black, dashed line is the proportional crop rectangle we ultimately want to extract out of a calculation.

Finally, the teal line from corner to corner is a helper for determining which way we are holding things.

Before wrapping up this first instalment, one final hint: the answer lies right there in the corners.

With that, happy holidays! Ho ho ho. See you next year, when Parts 2 and 3 will bring an algorithm and a Swift implementation.